When I first joined Functional AI Partners, I thought building a voice AI agent was mostly a technical challenge.

Pick the right APIs, wire the system together, make it sound natural - done.

Three missions later, I realised voice AI isn’t hard because of speech recognition or LLMs.

It’s hard because you’re designing behaviour that enters someone’s life uninvited.

Each mission forced me to confront a different question - not about technology, but about how humans experience AI. What follows isn’t a list of what we built, but how my thinking evolved as we tried to answer those questions.

Mission 1 - NOVA

Mission 1 Objective

Our goal was to build a hands-free way for busy professionals to stay informed - delivering essential news and market updates in the morning, without requiring screens, scrolling, or an hour of passive consumption.

Why this mattered

I wish I had my own personalized, interactive podcast that understands my emotions every day to start the morning.

Host of AI Internship Project · Founder of Functional AI Partners

Director, DCC Group · Former Investment Banking (NY) · SoftBank / GE (JP)

The Tension

What does a “good morning” from AI actually mean?

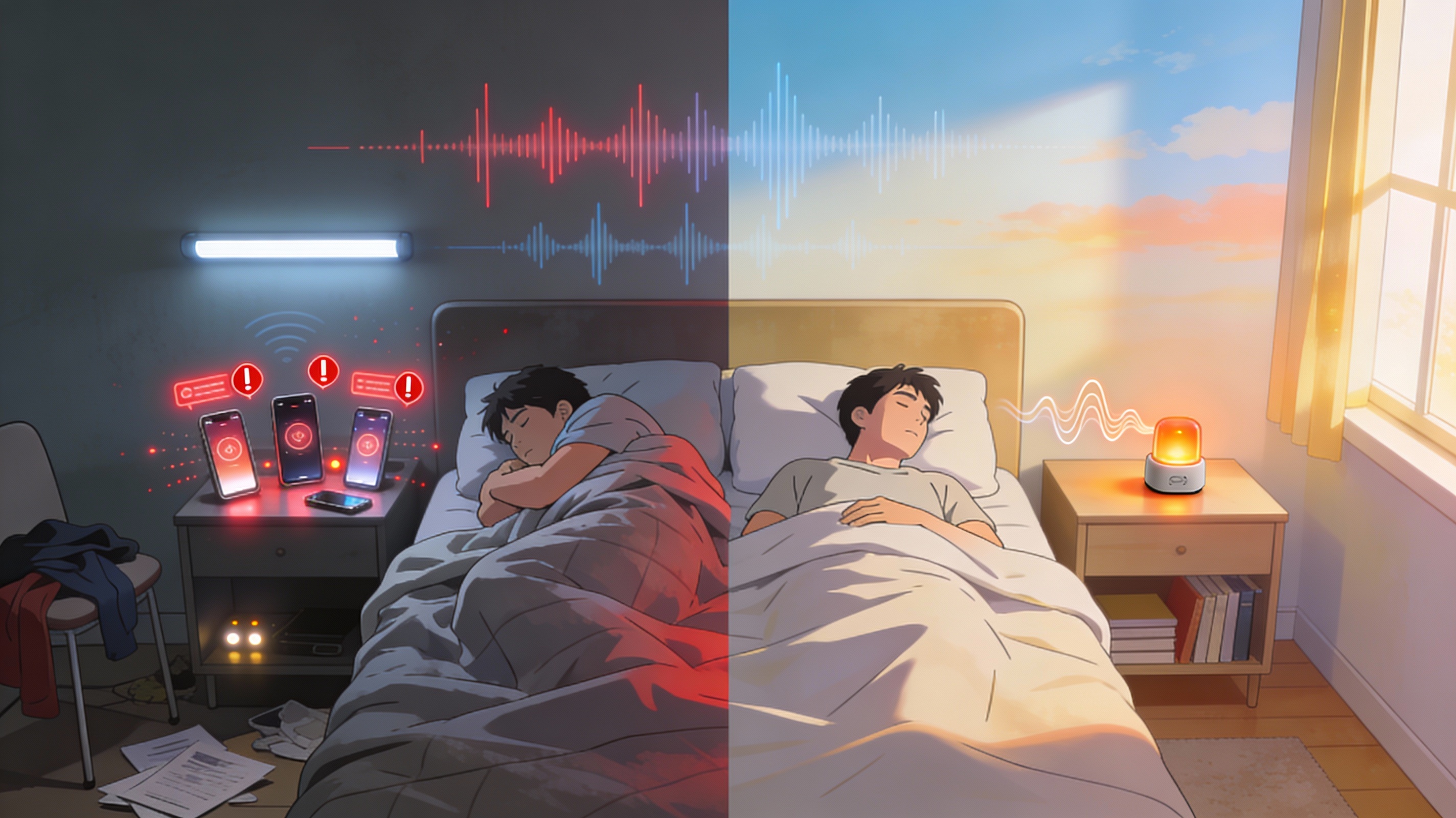

When we started Mission 1, the pitch sounded perfect: a voice AI that calls you in the morning and briefs you on the news. Simple. Helpful. Natural.

But when we actually built it, something felt deeply off.

I’d lie awake thinking about what happens when someone’s alarm goes off. They’re not rested. They’re not ready. They’re definitely not looking for more information - their mind is already spinning with the day ahead. Inboxes. Notifications. Meetings. Decisions.

And here we were, about to add another voice demanding their attention.

That’s when it hit me: we weren’t building a helpful product.

We were building an intrusion.

How My Thinking Shifted

Instead of asking “what should NOVA say?”, I started asking “what should NOVA not say?”

Instead of packing in more value, we asked how we could respect someone’s most vulnerable moment of the day.

Calling someone in the morning isn’t just a feature - it’s an act of responsibility. We had to earn the right to be there.

The Design Choice We Made

We committed to restraint.

We pre-processed information before the call so the conversation felt calm instead of overwhelming. We used emotion analysis not to manipulate, but to soften delivery. Follow-ups happened after the call, so the live interaction stayed light and brief.
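To make the split between the live call and the follow-up concrete, here is a minimal Python sketch. It is not NOVA's actual pipeline: the `Item` class, the `relevance` score, and the `max_live` cap of three are all assumptions chosen for illustration. It only shows the idea of ranking ahead of time and keeping the call itself short.

```python
from dataclasses import dataclass


@dataclass
class Item:
    headline: str
    relevance: float  # assumed score produced by an earlier ranking step


def plan_briefing(items: list[Item], max_live: int = 3) -> tuple[list[Item], list[Item]]:
    """Split pre-ranked items into what is said on the call and what is sent afterwards."""
    ranked = sorted(items, key=lambda item: item.relevance, reverse=True)
    return ranked[:max_live], ranked[max_live:]


# Hypothetical morning items, prepared before the call is placed.
items = [
    Item("Central bank holds rates", 0.9),
    Item("Tech earnings beat estimates", 0.7),
    Item("Oil prices dip", 0.6),
    Item("Local weather advisory", 0.2),
]
live, follow_up = plan_briefing(items)
```

Everything in `live` is spoken during the call; everything in `follow_up` waits for a message afterwards, so nothing is lost but nothing crowds the morning either.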

The voice had to feel dependable first, helpful second.

I stopped seeing voice AI as a chatbot and started seeing it as a scheduled service - something that had to show up on time, say what it promised, and leave you better than it found you.

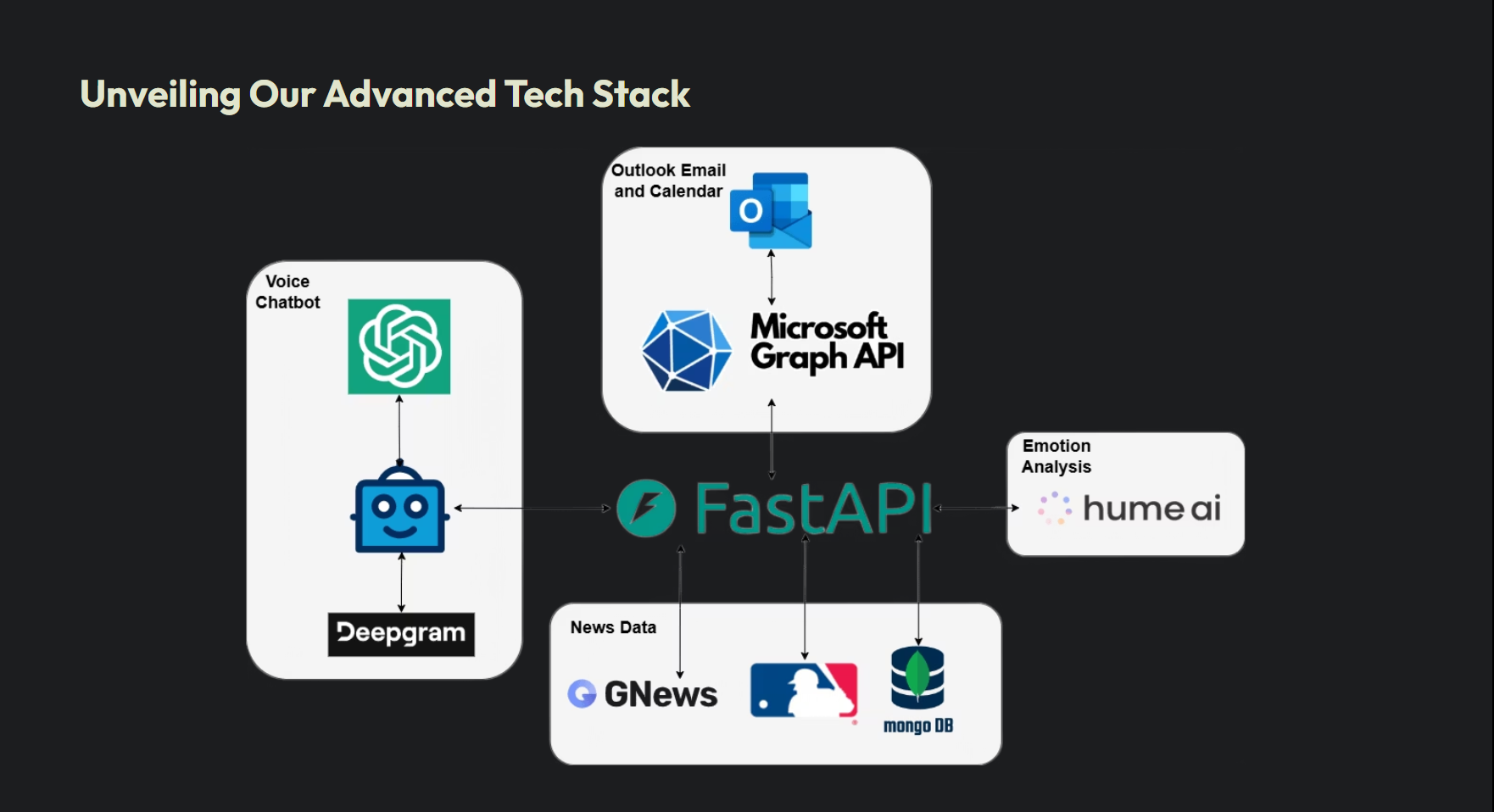

Tech Stack Overview

This system was intentionally designed to front-load intelligence and minimise real-time cognitive load during the call.

What This Mission Changed for Me

Voice AI lives or dies by how it makes people feel in the first 10 seconds.

Intelligence means nothing if the system doesn’t feel dependable.

Mission 2 - StudyMate

Mission 2 Objective

We set out to build an interactive AI companion that could grow alongside a user over time - exploring whether long-term, meaningful connections with AI could mirror the continuity and care found in human relationships.

The Tension

What if an AI wasn’t an assistant - but something closer to a presence?

Mission 2 felt uncomfortable in a different way.

We weren’t optimising for productivity anymore. We were asking something harder:

Can an AI be emotionally meaningful without becoming invasive or unhealthy?

At first, we played it safe. Respond when spoken to. Give advice when asked. Stay useful and stay out of the way.

But it felt hollow.

How My Thinking Shifted

I kept thinking about what companionship actually is.

A real friend doesn’t wait to be useful. They notice when you’re quiet. They remember what you mentioned weeks ago. They check in - not because they have advice, but because they care.

That led to a deeper tension:

Can an AI feel consistent over time - or does it always reset to “day one”?

If StudyMate was going to matter, it couldn’t just store information.

It needed memory with emotional continuity.

The Design Choice We Made

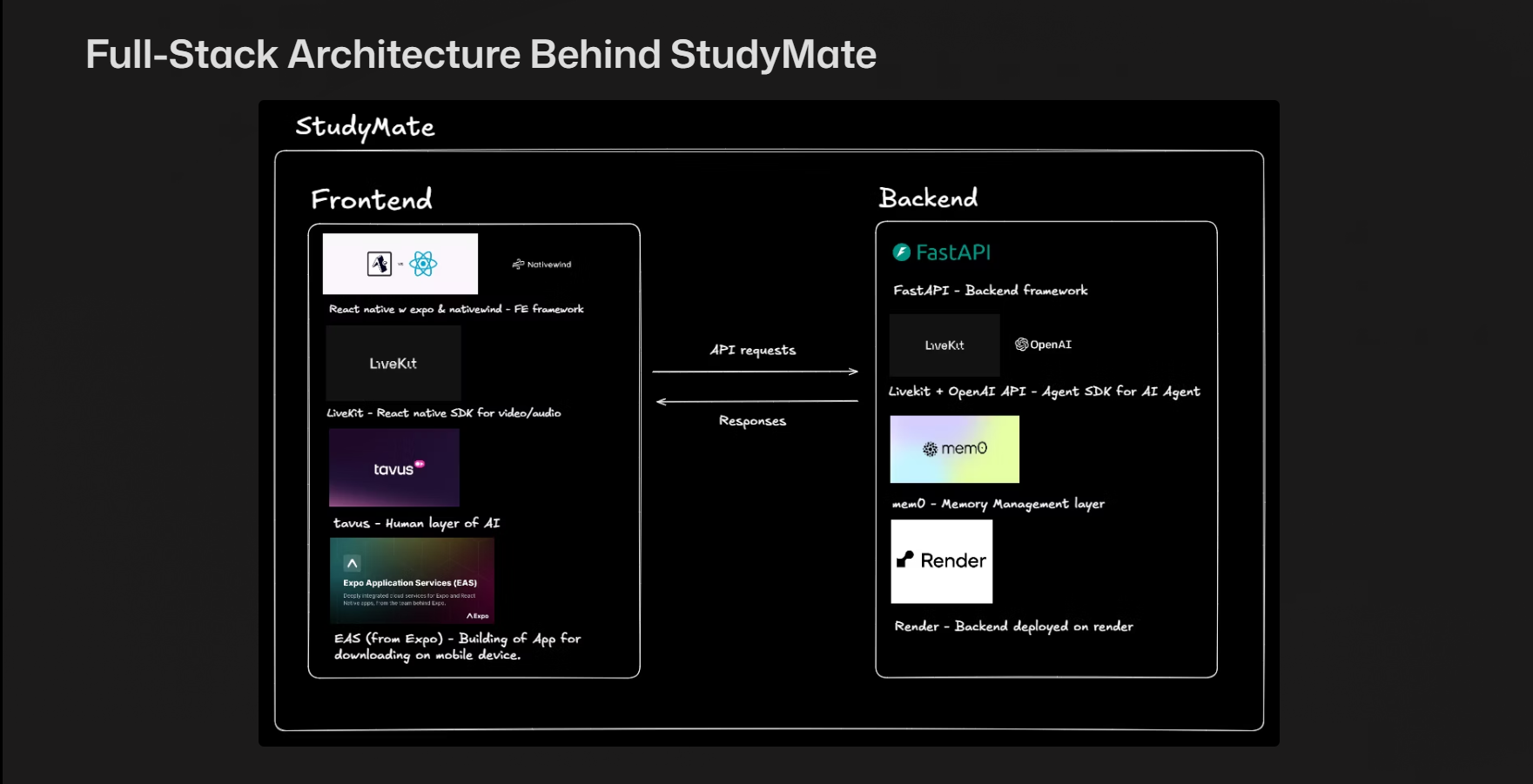

We treated memory as a design philosophy, not a feature.

Using a vector-based memory system, we ensured conversations didn’t restart from scratch. Context carried forward - not just facts, but emotional texture.
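A rough sketch of what "facts plus emotional texture" can look like in a vector memory is below. It is a toy under stated assumptions, not StudyMate's implementation: the word-count `embed` function stands in for a real embedding model, and the `mood` label and `MemoryStore` class are hypothetical names.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class Memory:
    text: str
    mood: str  # hypothetical emotional label stored next to the fact
    vector: Counter = field(init=False)

    def __post_init__(self) -> None:
        self.vector = embed(self.text)


class MemoryStore:
    def __init__(self) -> None:
        self.items: list[Memory] = []

    def remember(self, text: str, mood: str) -> None:
        self.items.append(Memory(text, mood))

    def recall(self, query: str, k: int = 1) -> list[Memory]:
        """Return the k stored memories most similar to the query."""
        q = embed(query)
        return sorted(self.items, key=lambda m: cosine(q, m.vector), reverse=True)[:k]


store = MemoryStore()
store.remember("worried about the calculus exam next week", mood="anxious")
store.remember("enjoyed the hiking trip with friends", mood="happy")
top = store.recall("how did the calculus exam go")[0]
```

Because the mood travels with the memory, a later conversation can pick up not only that there was an exam, but that the user was anxious about it.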

We also became deliberate about when the AI reached out. Calendar syncing wasn’t about productivity hacks; it was about respect. A nudge only feels caring when the timing makes sense.
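The timing rule can be sketched in a few lines. This is an illustration only, assuming the calendar has already been synced into a list of `(start, end)` busy blocks; the function name `next_free_slot` and the ten-minute buffer are made up for the example.

```python
from datetime import datetime, timedelta


def next_free_slot(
    desired: datetime,
    busy: list[tuple[datetime, datetime]],
    buffer: timedelta = timedelta(minutes=10),
) -> datetime:
    """Return `desired` if it is clear of every busy block, else the earliest later time that is."""
    candidate = desired
    for start, end in sorted(busy):
        if candidate < start - buffer:
            break  # candidate sits well before this block and all later ones
        if candidate < end + buffer:
            candidate = end + buffer  # inside or too close to the block: push past it
    return candidate


# Hypothetical calendar: two nearly back-to-back meetings.
day = datetime(2025, 1, 6)
busy = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=10, minute=5), day.replace(hour=11)),
]
nudge_at = next_free_slot(day.replace(hour=9, minute=30), busy)
```

A nudge requested for 9:30 lands in the first meeting, so it is pushed past both meetings and the buffer rather than squeezed into the five-minute gap between them.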

The friend feature came from the same principle. StudyMate wasn’t meant to replace human connection. By sharing memory between users and suggesting conversation prompts, we nudged connection outward, not inward.

Tech Stack Overview

This architecture was intentionally stateful - optimised for long-term relationships rather than one-off interactions.

StudyMate in Action

What This Mission Changed for Me

Emotional AI isn’t about sounding empathetic.

It’s about consistency, timing, and knowing when silence is more caring than words.

Mission 3 - AIRI

Mission 3 Objective

The aim was to build an AI emcee that could co-host live sessions - supporting speakers by asking the right questions at the right moments, and helping presentations flow more smoothly without taking control.

Why this mattered

I don't want AI to replace an emcee. I want it to ask good questions, at the right time, so the webinar becomes more engaging.

Host of AI Internship Project · Founder of Functional AI Partners

Director, DCC Group · Former Investment Banking (NY) · SoftBank / GE (JP)

The Tension

If an AI joins a live conversation, what gives it the right to speak?

Mission 3 was the most humbling.

“AI emcee” sounded impressive - until we asked a harder question: if the goal is to replace the speaker, why not just generate an AI voiceover and send the recording to participants?

That’s when the real intent became clear. The goal was never substitution.

In human settings, an emcee doesn’t replace the speaker - they facilitate the flow. Like at the Oscars, a good host knows when to step in, when to step back, and how to guide the experience without becoming the focus.

The more we prototyped, the clearer it became: we weren’t trying to make AI talk more.

We were trying to make the presentation flow better.

How My Thinking Shifted

I stopped asking: Can an AI host a webinar?

And started asking: What does “hosting” actually mean in a human context?

A good emcee doesn’t dominate. They listen. They sense momentum. They ask the questions the audience wishes they’d asked themselves.

Hosting isn’t about talking more.

It’s about intervening well.

The Design Choice We Made

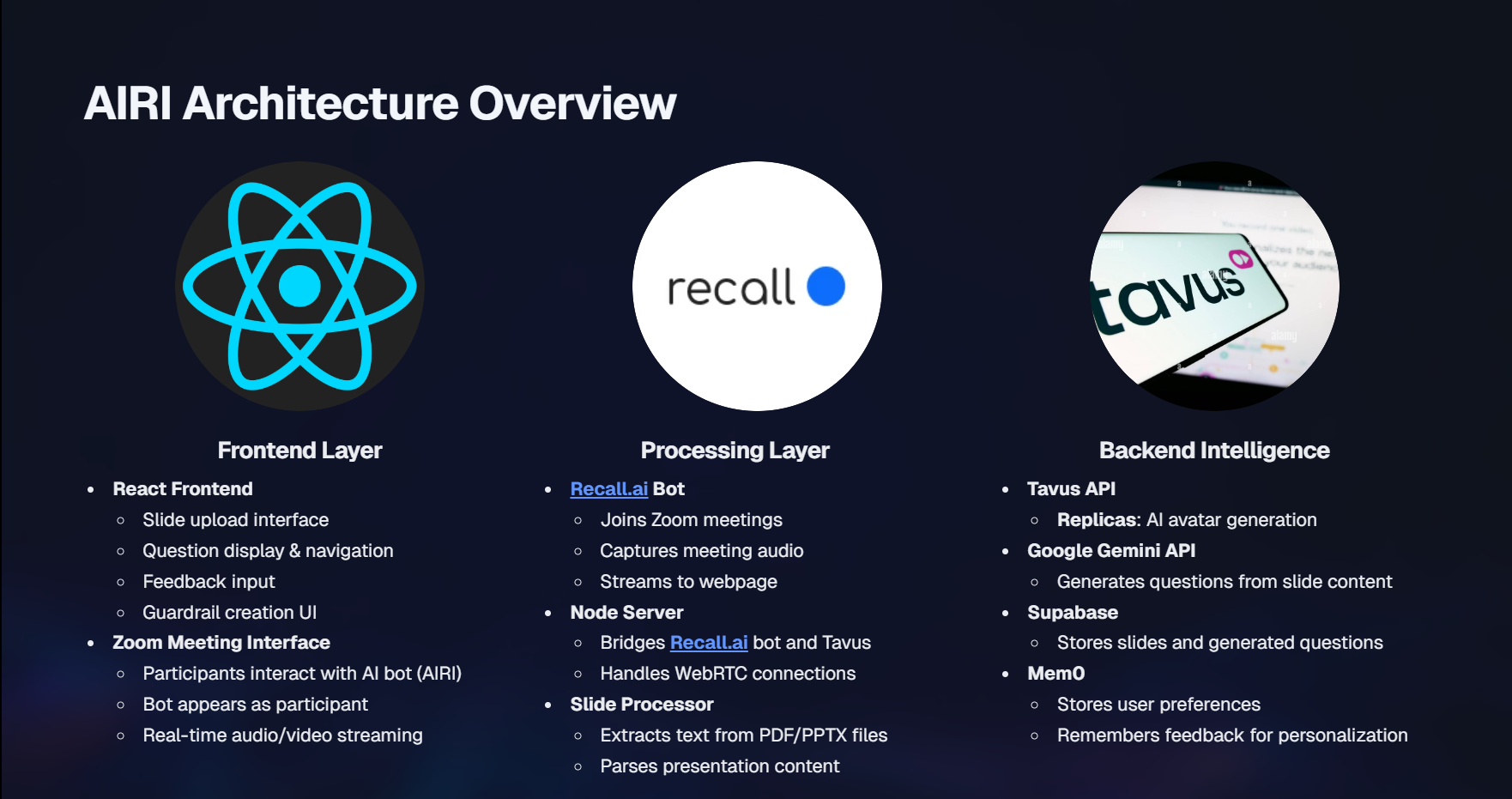

AIRI doesn’t improvise endlessly.

It relies on pre-processed slides, trigger phrases, and carefully prepared questions. When a trigger appears, it waits. It doesn’t interrupt. Only after the speaker finishes does it step in.
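The "hear the trigger, then wait" behaviour can be sketched as a small state machine. This is a simplified illustration rather than AIRI's code: the `Emcee` class, the two-second `pause_needed` threshold, and the idea of receiving transcribed segments with end timestamps are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Emcee:
    # Hypothetical mapping: trigger phrase -> question prepared before the session.
    prepared: dict[str, str]
    pause_needed: float = 2.0  # assumed seconds of silence that count as "finished"
    pending: Optional[str] = None
    last_speech_end: float = 0.0

    def on_speech(self, text: str, end_time: float) -> None:
        """Called for each transcribed speaker segment; never speaks from here."""
        self.last_speech_end = end_time
        lowered = text.lower()
        for phrase, question in self.prepared.items():
            if phrase in lowered:
                self.pending = question  # arm the question, but stay quiet

    def on_tick(self, now: float) -> Optional[str]:
        """Called periodically; returns a question only after a long enough pause."""
        if self.pending and now - self.last_speech_end >= self.pause_needed:
            question, self.pending = self.pending, None
            return question
        return None


emcee = Emcee({"our roadmap": "What is the biggest risk on that roadmap?"})
emcee.on_speech("Let me walk you through our roadmap", end_time=10.0)
first = emcee.on_tick(10.5)   # trigger heard, but the speaker only just stopped
emcee.on_speech("starting with the first quarter", end_time=14.0)
second = emcee.on_tick(15.0)  # still too soon after the last segment
third = emcee.on_tick(16.5)   # a real pause: now the question is released
fourth = emcee.on_tick(20.0)  # nothing pending any more
```

Note that `on_speech` can only arm a question. Speaking happens in `on_tick`, and only once the silence threshold has passed, which keeps "when to stay quiet" as the default path.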

The hardest engineering challenge wasn’t making AIRI speak intelligently.

It was teaching it when to stay quiet.

That’s the inverse of how most AI systems are designed.

Tech Stack Overview

This system prioritises listening, timing, and social awareness over raw output generation.

AIRI in Action

What This Mission Changed for Me

This mission taught me that context matters more than capability.

Before designing AIRI, we had to step back and observe how webinars actually work - how emcees guide flow, surface the right questions, and intervene only when it improves the experience.

The breakthrough wasn’t adding more intelligence.

It was understanding the setting AIRI was entering, and designing its behaviour to fit naturally within it.

Looking Back

What surprised me most is that all three missions taught me the same lesson from different angles:

- NOVA taught me how to make AI emotionally aware - but only in service of restraint, not domination.

- StudyMate revealed the nuance of building relationships between humans and AI - consistency and timing matter more than capability.

- AIRI was the breakthrough: we only understood what was needed once we stopped thinking about technology and started observing how webinars actually work.

I no longer think of AI systems as features or products.

I think of them as behaviours introduced into human routines.

That framing - more than any individual tech stack - is what I’m taking forward from Functional AI Partners.

Lastly, a huge shoutout to Functional AI Partners for giving me such an amazing learning experience over my six-month internship programme. Thank you for the opportunity.

Related Articles

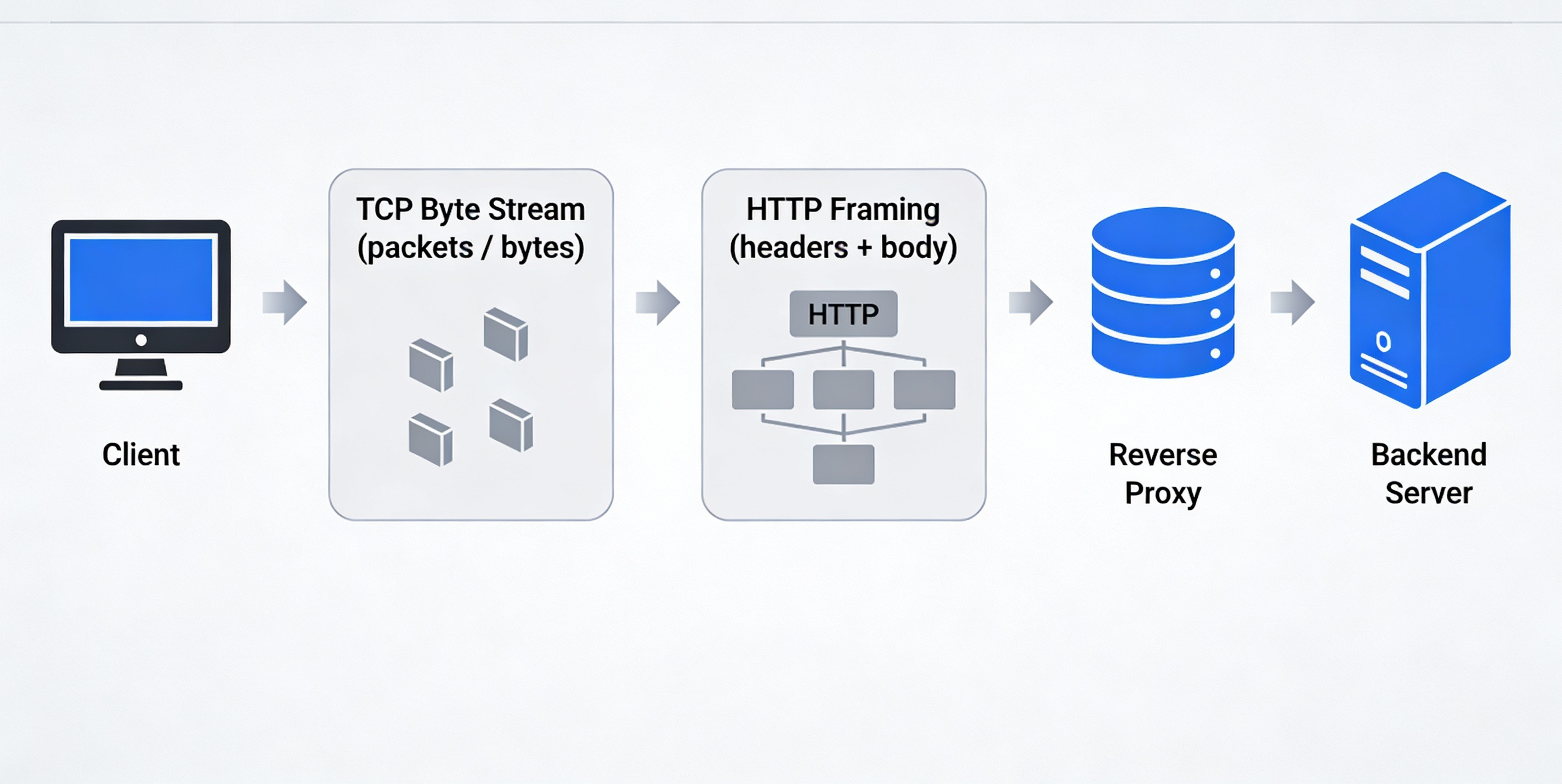

Attempting to Build an HTTP Server from Scratch (No Frameworks)

Understanding HTTP from first principles by building a minimal server and reverse proxy on raw TCP—request framing, correctness, concurrency, and guards.

Building a Git Clone from Scratch (and Finally Understanding Git)

A deep dive into Git internals by building a tiny Git clone from first principles - blobs, trees, commits, refs, and checkout.

From Lines of Code to Wires and Sensors

A reflection on building Inklytics at the SUTD SEVEN MVP Hackathon - a pen with sensors to detect exam anxiety tremors.